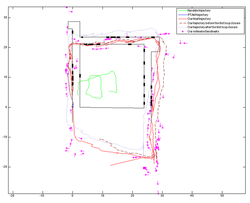

Parallel Tracking and Mapping with Odometry Fusion for MAV Navigation (2013, 2014)

Duy and I continued to work on the "Autonomous Flight in Man-made Environments" project. We parallelized the SLAM module that uses Wall-Floor Intersection Features, splitting it into a tracker and a mapper that run in parallel, and fused in odometry information to make the system more robust in feature-poor environments.
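To illustrate the tracker/mapper split in general terms, here is a minimal sketch of two threads connected by a queue. It is not our actual implementation: the function names, the keyframe rule (every third frame), and the use of plain integers as "frames" are assumptions made only for this example.

```python
import queue
import threading


def run_tracker(frames, keyframe_queue, keyframe_every=3):
    """Tracker thread: touches every frame, forwards only selected keyframes."""
    for index, frame in enumerate(frames):
        # A real tracker would estimate the camera pose for this frame here.
        if index % keyframe_every == 0:  # hypothetical keyframe rule
            keyframe_queue.put(frame)
    keyframe_queue.put(None)  # sentinel: no more keyframes will arrive


def run_mapper(keyframe_queue, map_keyframes):
    """Mapper thread: consumes keyframes at its own pace and grows the map."""
    while True:
        keyframe = keyframe_queue.get()
        if keyframe is None:
            break
        # A real mapper would add landmarks and re-optimize the map here.
        map_keyframes.append(keyframe)


def run_pipeline(frames, keyframe_every=3):
    """Run tracker and mapper concurrently and return the mapped keyframes."""
    keyframe_queue = queue.Queue()
    map_keyframes = []
    tracker = threading.Thread(
        target=run_tracker, args=(frames, keyframe_queue, keyframe_every)
    )
    mapper = threading.Thread(
        target=run_mapper, args=(keyframe_queue, map_keyframes)
    )
    mapper.start()
    tracker.start()
    tracker.join()
    mapper.join()
    return map_keyframes
```

The point of the design is that the tracker never waits for the slower map update; it only hands keyframes over through the queue.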

IROS WS Paper (2013): http://rpg.ifi.uzh.ch/docs/IROS13workshop/Nguyen.pdf

RAS Paper (2014): http://www.sciencedirect.com/science/article/pii/S0921889014000542

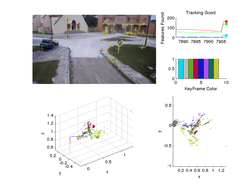

Monocular Visual SLAM for MAVs (2012)

I've been spending most of my efforts developing a monocular visual SLAM system for MAVs.

My current system uses GTSAM as the back-end inference engine and runs in real time (apart from the slow MATLAB GUI). Here are some results, with the computation not yet distributed:

Video (Camp Lejeune): http://youtu.be/Eqe2wH_WhPA

Video (Our flying arena): http://youtu.be/KzQbLrRNR2M

A fun experiment: running the system using only a single image pyramid level.

Level 1 (finest): http://youtu.be/uiuZb0Moghw

Level 2: http://youtu.be/T76PculaU_Y

Level 3: http://youtu.be/CyyClanjfho

Level 4 (coarsest): not enough features
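For readers unfamiliar with image pyramids, the sketch below builds one by repeatedly halving a grayscale image with 2x2 block averaging. It only illustrates the concept: the averaging filter, the four-level default, and the function names are assumptions for this example, not a description of my system's actual pyramid.

```python
def downsample(image):
    """Halve a grayscale image (a list of rows) by averaging each 2x2 block."""
    rows = len(image) // 2
    cols = len(image[0]) // 2
    return [
        [
            (
                image[2 * r][2 * c]
                + image[2 * r][2 * c + 1]
                + image[2 * r + 1][2 * c]
                + image[2 * r + 1][2 * c + 1]
            )
            / 4.0
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def build_pyramid(image, num_levels=4):
    """Return [finest, ..., coarsest]; each level has half the resolution."""
    levels = [image]
    for _ in range(num_levels - 1):
        levels.append(downsample(levels[-1]))
    return levels
```

Each coarser level has a quarter of the pixels of the one before it, which is consistent with the coarsest level above no longer yielding enough features.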

Autonomous Flight in Man-made Environments (2012)

I completed this work in collaboration with Duy-Nguyen Ta and Prof. Frank Dellaert.

We proposed a solution to the problem of autonomous flight and exploration in man-made indoor environments with a micro aerial vehicle (MAV), using only a frontal camera. We presented a general method to detect distant features that we call vistas and steer an MAV toward them, while building a map of the environment to detect unexplored regions. Our method enabled autonomous exploration while working flawlessly in textureless indoor environments that are challenging for traditional monocular SLAM approaches. We overcame the difficulties faced by traditional approaches with Wall-Floor Intersection Features, a novel type of low-dimensional landmark specifically designed for man-made environments to capture the geometric structure of the scene.

We demonstrated our results on a small, commercially available AR.Drone quad-rotor platform.

I presented this work during an IROS 2012 workshop on visual control of mobile robots.

Video: http://youtu.be/x8oyld2m9Cw

Paper: http://www.cc.gatech.edu/~dellaert/pubs/Ok12vicomor.pdf

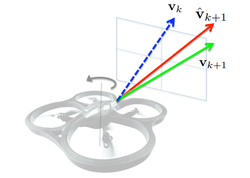

Attitude Heading Reference System with Rotation-Aiding Visual Landmarks (2012)

I worked on this project with Chris Beall, Duy-Nguyen Ta, and Prof. Frank Dellaert. We presented a novel vision-aided attitude heading reference system for micro aerial vehicles (MAVs) and other mobile platforms, which does not rely on known landmark locations or full 3D map estimation as is common in the literature.

We extended vistas (rotation-aiding landmarks from the above project) and used them as bearing measurements to aid the vehicle’s heading estimate and allow for long-term operation while correcting for sensor drift.
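As a rough illustration of how a bearing to a distant landmark can bound gyro drift, here is a simple complementary-filter sketch. This is not the estimator from the paper: the filter form, the fixed `gain`, and the assumption that the landmark's world-frame direction (`reference_bearing`) is held constant are all simplifications chosen for this example.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def update_heading(heading, gyro_rate, dt, measured_bearing=None,
                   reference_bearing=0.0, gain=0.1):
    """One filter step: integrate the gyro, then nudge toward the vista.

    measured_bearing is the landmark direction seen in the body frame.
    For a very distant landmark it equals reference_bearing - heading,
    so it constrains rotation without needing the landmark's position.
    """
    predicted = wrap_angle(heading + gyro_rate * dt)
    if measured_bearing is None:
        return predicted  # no vista visible: pure gyro integration
    heading_from_vista = wrap_angle(reference_bearing - measured_bearing)
    error = wrap_angle(heading_from_vista - predicted)
    return wrap_angle(predicted + gain * error)
```

With a biased gyro and no vista, the heading error grows without bound; with the bearing correction applied at every step, it settles to a small constant offset instead.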

Paper: http://www.cc.gatech.edu/~dellaert/pubs/Beall12fusion.pdf

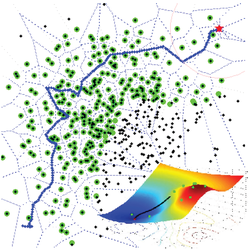

Path Planning with Uncertainty (2012)

I started this project as a class project for the Robot Intelligence: Planning course with Prof. Mike Stilman.

Our team (Sameer Ansari, Billy Gallagher, William Sica, and I) wanted a complete and optimal planner that could deal with uncertainty in obstacles in the environment.

We ended up developing a two-level path planning algorithm that could deal with map uncertainty.

The algorithm is a fusion of Voronoi diagrams and potential fields, in which the higher-level planner, using Voronoi diagrams, guarantees a connected graph from the source to the goal if a collision-free path exists. The lower-level planner uses uncertainty in the obstacles to assign repulsive forces based on their distance to the robot and their positional uncertainty. Thus, the attractive force from the Voronoi nodes and the repulsive forces from the uncertainty-biased potential fields form a hybrid planner we call Voronoi Uncertainty Fields.
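The force combination described above can be sketched as follows. This is a hedged illustration, not the formulation from the paper: the classic inverse-distance repulsion, the choice to shrink the distance by one standard deviation `sigma`, and the parameters `influence_radius` and `gain` are assumptions for this example. The Voronoi node is assumed to be supplied by the higher-level planner.

```python
import math


def attractive_force(robot, voronoi_node, gain=1.0):
    """Pull the robot toward the next Voronoi node on the high-level path."""
    return (gain * (voronoi_node[0] - robot[0]),
            gain * (voronoi_node[1] - robot[1]))


def repulsive_force(robot, obstacle, sigma, influence_radius=2.0, gain=1.0):
    """Push the robot away from an obstacle; uncertain obstacles push harder.

    The obstacle is treated as if it were sigma closer than its mean
    position (a hypothetical way to bias the field by uncertainty).
    """
    dx = robot[0] - obstacle[0]
    dy = robot[1] - obstacle[1]
    distance = math.hypot(dx, dy)
    effective = max(distance - sigma, 1e-6)
    if distance == 0.0 or effective >= influence_radius:
        return (0.0, 0.0)
    magnitude = gain * (1.0 / effective - 1.0 / influence_radius) / effective ** 2
    return (magnitude * dx / distance, magnitude * dy / distance)


def total_force(robot, voronoi_node, obstacles):
    """Sum the attraction and all repulsions; obstacles are (position, sigma)."""
    fx, fy = attractive_force(robot, voronoi_node)
    for position, sigma in obstacles:
        rx, ry = repulsive_force(robot, position, sigma)
        fx += rx
        fy += ry
    return (fx, fy)
```

In this sketch, two obstacles at the same mean position repel differently: the one with the larger positional uncertainty produces the stronger force.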

Video: http://youtu.be/o_WGAgYeSao

Paper: https://borg.cc.gatech.edu/sites/edu.borg/files/papers/Ok13icra.pdf

Hacking the AR.Drone (2012)

[UPDATE 2013.06.19] With the AR.Drone 2.0 and the newest firmware (make sure to update), the synchronization problem is fixed. You can get the timestamp for the frames in the PaVE struct.

Hacking the Parrot AR.Drone was my primary focus when I first came to Georgia Tech.

Using Parrot's stock firmware, we weren't able to get proper synchronization between camera frames and the IMU measurements. To solve this problem, I developed a method to "hijack" the frontal camera and stream down camera frames with JPEG compression and properly synchronized timestamps. This method improved the performance of our algorithms.
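To show why synchronized timestamps matter, here is a small sketch of how a frame can be paired with the closest IMU sample once both carry timestamps from the same clock. It is only an illustration: the function name and the integer microsecond timestamps are assumptions for this example, not part of the drone's firmware or my streaming code.

```python
import bisect


def nearest_imu_index(imu_timestamps, frame_timestamp):
    """Return the index of the IMU sample closest in time to a camera frame.

    imu_timestamps must be a non-empty list sorted in ascending order.
    """
    pos = bisect.bisect_left(imu_timestamps, frame_timestamp)
    if pos == 0:
        return 0
    if pos == len(imu_timestamps):
        return len(imu_timestamps) - 1
    before = imu_timestamps[pos - 1]
    after = imu_timestamps[pos]
    # Pick whichever neighbour is closer; ties go to the earlier sample.
    if after - frame_timestamp < frame_timestamp - before:
        return pos
    return pos - 1
```

Without a shared clock, the same lookup would pair a frame with the wrong inertial measurement, which is the kind of mismatch that degrades vision-inertial algorithms.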

Ever since, I've been working on a few AR.Drone "hacking" projects. I developed a custom on-board client to interact with the on-board server firmware, and a method to preload V4L2 functions to hijack the camera buffers without disturbing the drone's operations.

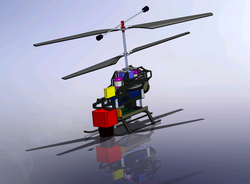

Vision-based Autonomous Helicopter (2011)

This project was for the University of Waterloo Micro Aerial Vehicles (UWMAV) team.

We housed our autopilot PCB along with a Point Grey FireFly camera on a modified LAMA 4000 co-axial helicopter. The helicopter was upgraded with aftermarket parts and a custom-machined body.

The SolidWorks drawing shows the helicopter with our PCB in green and the camera in red.

We were able to control the helicopter over the Gumstix's WiFi, stream camera frames, and use an IMU and optical flow from a bottom-facing camera to estimate the vehicle state.
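As a rough idea of how optical flow and an IMU complement each other, the sketch below converts the flow seen by a downward-facing camera into a ground velocity along one axis, using the gyro to remove the flow caused by rotation. This is a textbook pinhole-camera approximation, not our flight code: the function name, the sign conventions, and the assumption of flat ground at a known height are all made up for this example.

```python
def flow_to_velocity(flow_px_per_s, gyro_rate_rad_s, height_m, focal_length_px):
    """Estimate ground velocity (m/s) along one image axis.

    Assumes a downward-facing pinhole camera over flat ground, small
    angles, and a gyro rate expressed so that pure rotation produces
    an image flow of gyro_rate * focal_length pixels per second.
    """
    rotational_flow = gyro_rate_rad_s * focal_length_px
    translational_flow = flow_px_per_s - rotational_flow
    return translational_flow * height_m / focal_length_px
```

For example, 100 px/s of flow at 2 m height with a 400 px focal length and no rotation corresponds to 0.5 m/s.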

We participated in the IMAV 2011 competition in Alabama with this helicopter.

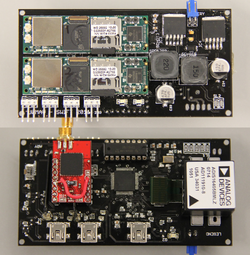

Designing Autopilot PCB for MAVs (2011)

This project was for the UWMAV team at the University of Waterloo.

Two team members (Jinsuk Seo and Mohammed Ibrahim) and I developed a stand-alone PCB for general use in controlling MAVs. This PCB was meant to be a plug-and-play solution for controlling any MAV, independent of its type (fixed-wing, quadrotor, flapping-wing, etc.).

The PCB hosted two Gumstix computer-on-modules (one for vision processing and the other for state estimation / filtering), switching power circuitry, 8 PWM motor outputs, an Analog Devices IMU, a GPS, and a low-level micro-controller for real-time control.

The PCB was designed, populated, and tested by our team and later used in our vision-based autonomous helicopter project.

Later I received the ASME - Northern Alberta Design Challenge Award for this work.

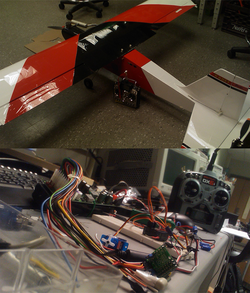

Autopilot System for Fixed-wing MAV (2010)

This project was done during my internship at the University of Waterloo Autonomous Vehicles Laboratory (WAVE) under the supervision of Prof. Steven Waslander.

In order to assist with research in aggressive trajectory tracking for fixed-wing MAVs, I developed both the hardware and the software for an autonomous fixed-wing platform.

I put together air speed sensors, an IMU, a GPS, motor controllers, a radio receiver, a safety system, and a PC104 board running the QNX operating system to interface with all the sensors and command the desired motor PWMs.

The idea was to develop a fully functional fixed-wing platform that could take off as soon as the trajectory tracking control laws were developed.

Ground Station for Displaying MAV Sensor Data (2010)

This ground station was developed during my internship at the WAVE lab to serve as a generic way to view MAV sensor data in real time.

The top right shows a map image of the current GPS location, actively pulled from Google Maps. The instruments along the bottom show, in order, yaw heading, roll/pitch horizon, altitude, and air speed.

The GUI was designed in MFC with a C++ back-end, and all instruments displayed the sensor data streamed down to the ground in real time.

The ground station was later used with the aforementioned fixed-wing MAV to visualize the flight data.

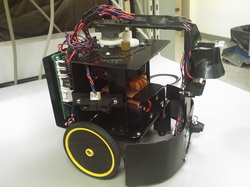

Fire Fighting Robot (2009)

This is a fire fighting robot that was built as a class project.

With Jinsuk Seo, Mohammed Ibrahim, and Sean Anderson, we machined and programmed a fire fighting robot that could autonomously detect candles with IR sensors and blow them out while navigating around a small environment with walls and floor markers.

We implemented a simple PID controller on the side-facing sonar sensors to keep a fixed distance away from the walls. Check out the YouTube video of it in action! (Thanks Sean for the upload)
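For anyone curious what such a wall-following loop looks like, here is a minimal PID sketch with a toy simulation. It is not the robot's original firmware: the gains, the time step, and the simplified model in which the controller output directly sets the robot's velocity toward or away from the wall are assumptions for this example.

```python
class PID:
    """Textbook PID controller acting on an error signal."""

    def __init__(self, kp, ki, kd):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.integral = 0.0
        self.previous_error = None

    def update(self, error, dt):
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0  # no history yet on the first call
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_wall_following(initial_distance, target_distance,
                            steps=400, dt=0.05):
    """Toy model: the sonar reads the wall distance, PID sets lateral speed."""
    pid = PID(kp=2.0, ki=0.1, kd=0.2)  # hypothetical gains
    distance = initial_distance
    for _ in range(steps):
        error = target_distance - distance  # positive: too close to the wall
        lateral_velocity = pid.update(error, dt)
        distance += lateral_velocity * dt
    return distance
```

Starting 0.8 m from the wall with a 0.3 m target, the simulated distance settles close to the target within the simulated 20 seconds.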

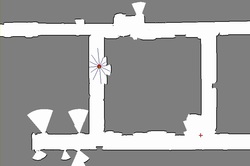

Benchmarking Local Path Planners (2009)

This project was done during my internship at the Robotics Laboratory at POSTECH under the supervision of Prof. Wan Kyun Chung. The goal of the project was to simulate different local path planners and benchmark their performance against each other.

The robot, simulated to be equipped with a ring of sonar sensors and odometers, was given a start and a goal in office-like environments. The framework was developed in C++ with the GUI front-end generated using MFC. The red circle shows the robot position with green indicating its heading and the blue rays are the current sonar readings.

I benchmarked the Dynamic Window Approach, Vector Field Histogram, and Nearest Voronoi Diagram planners based on their average completion time.

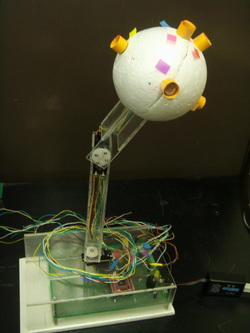

Sunflower Bot (2008)

It is still unclear to me whether I should be proud of this work or ashamed of it.

In any case, this was my first robot (built for fun) when I got my hands on an AVR ATmega128.

The idea was to have photo-sensitive sensors on its spherical head and follow the sun around like a sunflower. I even made little yellow leaves to put on the robot, but was too embarrassed to use them.

The robot used a servo motor for each of its two joints and really long wires because I could not figure out how to untangle the wire.

I wanted to give this to my grandmother as a gift, but could never get it to track the sun properly.
